Shape Immersive

The Company

Shape Immersive (SI) is on a mission to build a decentralized marketplace that will make spatial data universally accessible so anyone can create scalable and persistent Augmented Reality experiences.

They believe Augmented Reality and Mixed Reality will be the next fundamental platform shift, supplanting the multi-touch interfaces of today. Blending virtual worlds with physical ones opens up an entirely new frontier.

The TED Demo

SI wanted to use technology to conjure magic: to create awesome, lifelike experiences. Mixed Reality is the closest we have to doing that. For the magic to be credible, any augmented reality (AR) content must be spatially accurate when it interacts with the physical world. We’ve all experienced sub-par AR where a virtual object drifts off into the distance.

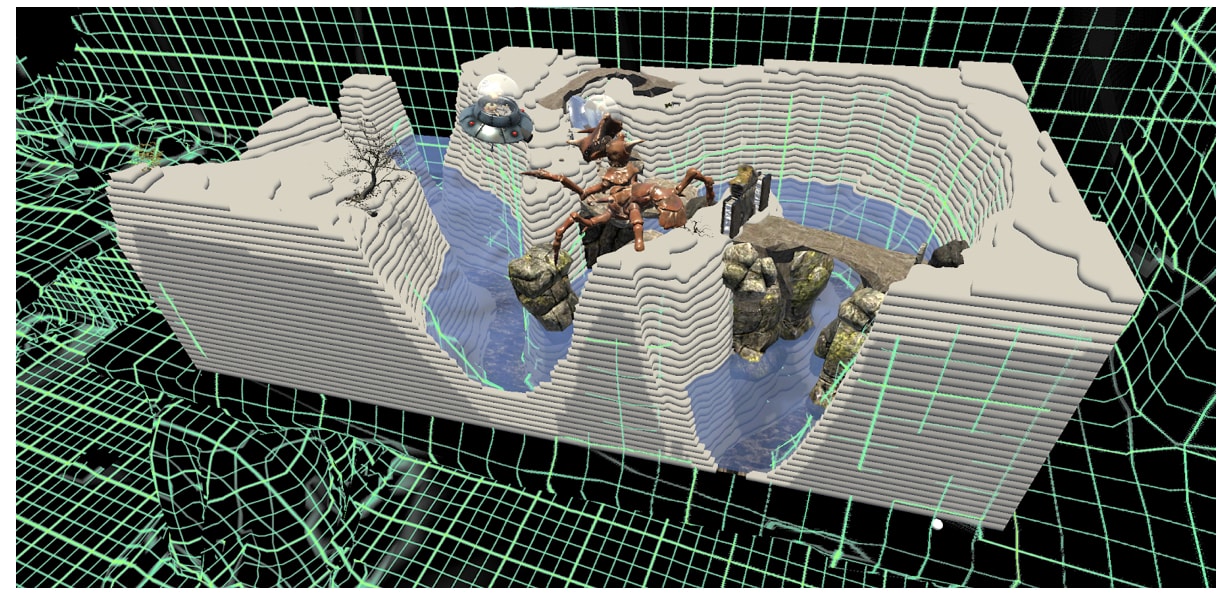

Mitigating these issues also meant having virtual objects cast shadows on, and be occluded by, real-life objects. Just like magic, we know it’s not real, but seeing shadows cast by a UFO onto your table suspends your disbelief. The experience we set out to make would be a lens into another, fictional world.

Bridge Engine

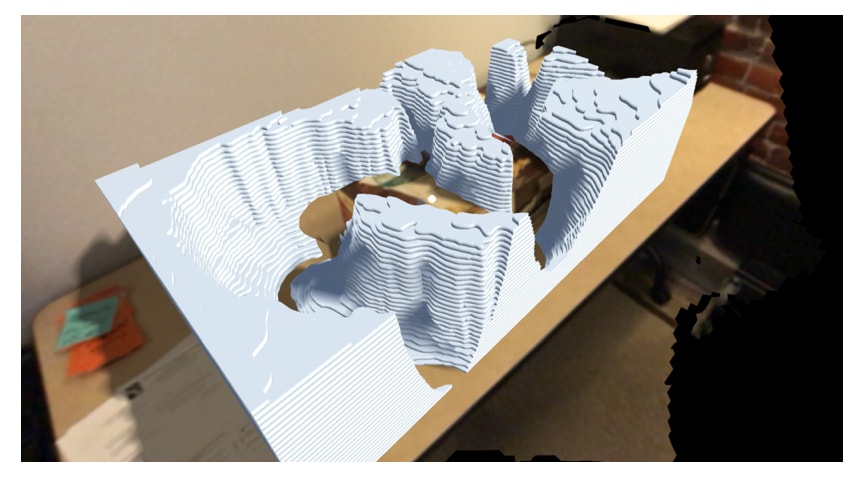

Nano 3 Labs was involved in the software development, but Mixed Reality (MR) also required a physical set. The physical model is a replica of Horseshoe Bend, a meander of the Colorado River near the Grand Canyon.

Once we had the virtual model, we had to translate it into a physical one. Because the plywood set was CNC machined from the virtual model, the virtual model was an exact copy of the physical one. The virtual model could then be deployed to an iPad using Bridge Engine in Unity.

Bridge is a mixed reality kit that includes a remote, a headset, a Structure Sensor, and the Bridge Engine SDK. It provides stereo mixed reality powered entirely by an iPhone. First, the user scans the space, generating an accurate 3D model of the environment they want to track in. Then they activate the full Mixed Reality rendering mode, which projects a live image from the onboard camera. Virtual objects can cast shadows on, bounce off, and be occluded by real-world surfaces, while the user keeps a full view of their surroundings.

Creative Direction

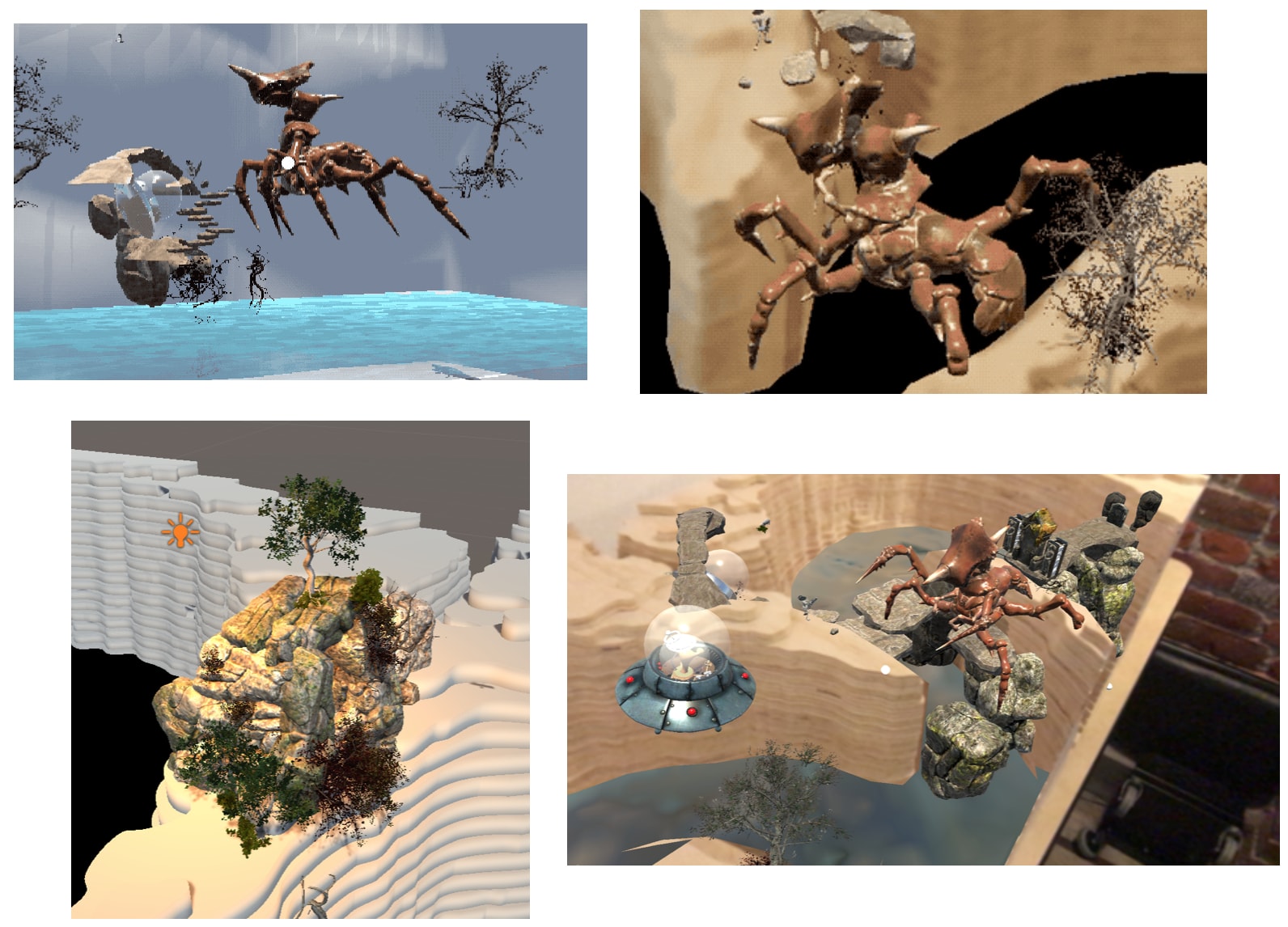

Due to time constraints, no original models were created for this experience. Instead, off-the-shelf 3D models were composed into the scene. Our final concept was a set called “Great Misunderstanding”: a UFO and a crab monster fighting each other over territory.

We started by animating the assets in Autodesk Maya and bringing them into Unity with the “Send to Unity” feature. Later, we had to re-animate them in Unity to support interactions between the two opposing characters.

Alignment

The virtual model was at 1:1 scale with the physical model, but when placed manually inside Unity, it was always slightly off. At TED, we also couldn’t afford to scan, adjust the model’s placement, and then rebuild the app, because each build often took 10 minutes or more.

Final alignment was done at runtime: while holding down a controller button, the operator physically moved around the model, and the virtual model followed at a 1:10 ratio, moving much more slowly than the camera itself.

This alignment technique worked in all six degrees of freedom simultaneously: position (x, y, z) and rotation (pitch, yaw, roll). It also proved remarkably intuitive.
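The 1:10 follow behavior can be sketched as a small per-frame update. This is a minimal, engine-agnostic sketch under stated assumptions, not the team's Unity C# implementation: poses are plain tuples, rotations are unit quaternions in (w, x, y, z) order, the model rotates about its own origin, and all names (`NudgeAligner`, `scale_rotation`, and so on) are hypothetical. On each frame the button is held, it takes the camera's motion since the previous frame and applies one tenth of it to the model: a tenth of the displacement, and a rotation about the same axis by a tenth of the angle.

```python
import math

FOLLOW_RATIO = 0.1  # 1:10 ratio from the text; the exact value is an assumption


def quat_mul(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def quat_from_axis_angle(axis, angle):
    """Unit quaternion rotating by `angle` radians about `axis` (any length > 0)."""
    ax, ay, az = axis
    n = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle / 2) / n
    return (math.cos(angle / 2), ax * s, ay * s, az * s)


def quat_angle(q):
    """Rotation angle in radians (0..pi) of a unit quaternion."""
    w, x, y, z = q
    return 2 * math.atan2(math.sqrt(x * x + y * y + z * z), abs(w))


def scale_rotation(q, t):
    """Same axis as q, angle multiplied by t (taking the shortest arc)."""
    w, x, y, z = q
    if w < 0:  # q and -q are the same rotation; pick the short way round
        w, x, y, z = -w, -x, -y, -z
    s = math.sqrt(x * x + y * y + z * z)
    if s < 1e-12:  # no rotation: nothing to scale
        return (1.0, 0.0, 0.0, 0.0)
    angle = 2 * math.atan2(s, w)
    return quat_from_axis_angle((x, y, z), angle * t)


class NudgeAligner:
    """Hypothetical helper: moves the model by a fraction of camera motion while held."""

    def __init__(self, model_pos, model_rot, ratio=FOLLOW_RATIO):
        self.model_pos = list(model_pos)
        self.model_rot = model_rot
        self.ratio = ratio
        self._prev = None  # last camera pose seen while the button was held

    def update(self, button_held, cam_pos, cam_rot):
        """Call once per frame with the tracked camera pose."""
        if not button_held:
            self._prev = None  # forget history so a new press never causes a jump
            return
        if self._prev is not None:
            prev_pos, prev_rot = self._prev
            # Translation: apply a fraction of the camera's displacement.
            for i in range(3):
                self.model_pos[i] += self.ratio * (cam_pos[i] - prev_pos[i])
            # Rotation: world-frame camera delta, scaled down, applied to the model.
            delta = quat_mul(cam_rot, quat_conj(prev_rot))
            self.model_rot = quat_mul(scale_rotation(delta, self.ratio), self.model_rot)
        self._prev = (tuple(cam_pos), cam_rot)


if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    aligner = NudgeAligner((0.0, 0.0, 0.0), identity)
    aligner.update(True, (0.0, 0.0, 0.0), identity)  # button pressed
    yaw90 = quat_from_axis_angle((0.0, 1.0, 0.0), math.pi / 2)
    aligner.update(True, (1.0, 0.0, 0.0), yaw90)  # camera moves 1 unit, turns 90 degrees
    print(aligner.model_pos, math.degrees(quat_angle(aligner.model_rot)))
```

In this sketch, moving the camera 1 unit and turning it 90 degrees with the button held shifts the model by 0.1 units and turns it by 9 degrees. Applying frame-to-frame deltas rather than an absolute offset means releasing and re-pressing the button never makes the model jump, and scaling the rotation's angle (instead of each Euler angle separately) keeps pitch, yaw and roll coupled consistently.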

The interactions were built with a combination of C# scripting, Unity Mecanim state machines, and animation tracks with event triggers. Unity generated the final package by converting the C# code into C++ with its IL2CPP backend and producing an Xcode project, which Xcode then built into an iOS app. We also modified some of the native Objective-C bootstrap code to bypass the debug settings after the first scan.

The Outcome

In under a month, we delivered a working demo of the Great Misunderstanding to TED VIPs through Shape Immersive. We had a great time developing it and even impressed other AR experts along the way.

As TED VIPs came to check out the experience, they were able to enjoy a god-like perspective and watch the story unfold from all angles. Many were impressed by the variety of virtual objects, such as trees, rocks, bridges, and animals, and by how they handled occlusion with, and stayed aligned to, the physical model.